Connecting Data & Running Pods

The basic component of the Bitfount network is the Pod (Processor of data). Pods are co-located with data, check whether users are authorised to perform the requested operations on the data, and then carry out any approved computation. A Pod must be created with connected data before any data analysis can take place.

When you create a Pod, you assign it metadata according to its contents. If you are getting started with production-ready data, it’s useful to understand your desired Pod metadata prior to setting up a Pod. For more details on possible Pod configuration parameters, see the API Reference.

There are three primary mechanisms for creating a Pod, which you can choose from based on your level of comfort with each method:

- YAML configuration

- The Bitfount Python API

- Docker

We've included examples of .csv file sources for Pod configurations below. If you don't see an example for your data source or want more information on best practices for a given data source, we have outlined Pod configuration for each data source type in more detail in Data Source Configuration Best Practices.

Given the federated nature of the Bitfount architecture, Pods can only be accessed if they are online. This means that a Data Scientist can only run tasks on the data in a Pod if the Pod is running on the Pod owner’s local machine or server at the time of submitting a query or task. As a result, Bitfount recommends that Pod owners create Pods that leverage always-on servers to reduce the need to work synchronously across teams and partners.

💡 Pro-Tip: To ensure authorised data scientists can access Pods at their convenience, create Pods linked to always-on machines. This includes cloud-based server instances, on-premise servers with guaranteed uptime, or hosted server solutions - all with access to their own local data sources.

YAML Configuration

Setting up a pod can be done through configuration using a yaml file along with the run_pod script. The format for executing this is:

    bitfount run_pod <path_to_config.yaml>

The configuration yaml file needs to follow the format specified in the PodConfig class.

CSV setup

Example yaml file format for .csv files:

    pod_name: census-income
    datasource: CSVSource
    pod_details:
      display_name: Census income demo pod
      description: >
        This pod contains data from the census-income public dataset,
        which includes data related to individual adults, their circumstances, and income.
    data_config:
      ignore_cols: ["fnlwgt"]
      force_stypes:
        census-income:
          categorical: ["TARGET", "workclass", "marital-status", "occupation", "relationship", "race", "native-country", "gender", "education"]
      datasource_args:
        path: <PATH TO CSV>/<filename>.csv
        seed: 100

For yaml configuration examples for other data source types (e.g. PostgreSQL databases, Excel files, SQLite database files, etc.) see Data Source Configuration Best Practices.
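Because the configuration refers to columns by name, it can help to confirm that those names actually exist in the CSV before starting the Pod. The following is a minimal sketch using only the Python standard library; it is not part of the Bitfount API. The helper name `missing_columns` and the sample data are hypothetical, and the assumption that `force_stypes` maps a pod name to semantic types to column lists is taken from the example above.

```python
import csv
import io


def missing_columns(csv_file, ignore_cols, force_stypes):
    """Return config-referenced columns that are absent from the CSV header.

    Hypothetical helper for illustration; not a Bitfount function.
    """
    # The first row of the CSV is assumed to be the header.
    header = next(csv.reader(csv_file), [])
    referenced = set(ignore_cols)
    for stypes in force_stypes.values():   # keyed by pod name
        for columns in stypes.values():    # keyed by semantic type
            referenced.update(columns)
    return sorted(referenced - set(header))


# In-memory stand-in for a CSV file (illustrative columns only).
sample = io.StringIO("age,workclass,fnlwgt,TARGET\n39,State-gov,77516,<=50K\n")
print(missing_columns(
    sample,
    ignore_cols=["fnlwgt"],
    force_stypes={"census-income": {"categorical": ["TARGET", "workclass", "gender"]}},
))  # prints ['gender']
```

With a real file, you would pass `open("<PATH TO CSV>/<filename>.csv", newline="")` in place of the in-memory sample. An empty result means every column named in the config was found in the header.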

Using the Bitfount Python API

Pods can be set up directly through the Python API, as shown in the Running a Pod Tutorial. We recommend using a Python-compatible notebook such as Jupyter to organise Pod creation.

Prior to installing Bitfount, we recommend generating a virtual environment from which to orchestrate Pod creation and other Bitfount tasks. If you’re unfamiliar with this process, you can run the commands:

    python -m venv {directory_for_venv}
    source {directory_for_venv}/bin/activate

If you’ve already installed Bitfount, activate your previously created virtual environment by running:

    source {directory_for_venv}/bin/activate

To launch a Jupyter notebook (optional):

    jupyter notebook

Once you’ve prepped your environment:

Import the relevant pieces. The classes illustrated below are the minimum required for running Pods. However, you may wish to include additional classes depending on what you plan to achieve. More details on available classes can be found in the API Reference.

    import logging

    import nest_asyncio

    from bitfount import Pod
    from bitfount.runners.config_schemas import (
        DataSplitConfig,
        PodConfig,
        PodDataConfig,
        PodDetailsConfig,
    )
    from bitfount.runners.utils import setup_loggers

    nest_asyncio.apply()  # Needed because Jupyter also has an asyncio loop

Set up the loggers, which are required to view the status of your Pod:

    loggers = setup_loggers([logging.getLogger("bitfount")])

Create the Pod and configure it using the PodDetailsConfig and PodDataConfig classes. The former is required to specify the display name and description of the Pod, whilst the latter is used to customise the schema and underlying data in the datasource (e.g. modifiers, semantic types of columns, DataSplitConfig inputs, etc.). For more information, refer to the config_schemas reference guide. Note, Pod names cannot include underscores.

    pod = Pod(
        name="my-pod",
        # see Data Source Configuration Best Practices guide for examples of each supported source type
        datasource=datasource,
        pod_details_config=PodDetailsConfig(
            display_name="Demo Pod",
            description="This pod is for demonstration purposes only",
        ),
        data_config=PodDataConfig(
            force_stypes={
                "my-pod": {
                    "categorical": ["target"],
                    "image": ["file"]
                },
            },
        ),
    )

Finally, start the pod by calling the start method on the Pod:

    pod.start()
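Since Pod names cannot include underscores, a small guard before constructing the Pod can catch a bad name early. This is a minimal sketch, not Bitfount functionality: `check_pod_name` is a hypothetical helper, and it checks only the single rule stated above. Bitfount may enforce further naming rules that this sketch does not cover.

```python
def check_pod_name(name: str) -> str:
    """Return the name unchanged, or raise if it contains an underscore.

    Hypothetical helper; it checks only the no-underscore rule.
    """
    if "_" in name:
        # Offer a hyphenated alternative in the error message.
        suggestion = name.replace("_", "-")
        raise ValueError(
            f"Pod name {name!r} contains an underscore; consider {suggestion!r}"
        )
    return name


print(check_pod_name("my-pod"))  # prints my-pod
```

The checked value could then be passed as the `name` argument when creating the Pod.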

Using Docker

Instead of running the Python script directly, you can also run it via Docker using the Pod Docker image hosted on GitHub.

The image requires a config.yaml file, which follows the same format as the yaml used by the run_pod script as shown above. By default the Docker image will try to load it from /mount/config/config.yaml inside the Docker container.

You can provide this file in one of two ways:

- Mounting/binding a volume to the container. Exactly how you do this may vary depending on your platform/environment (Docker/docker-compose/ECS).

- Copying a config file into a stopped container using docker cp.

Depending on your data source, some additional setup may be needed:

- CSV or Excel: If you're using a CSV or Excel data source then you'll need to mount your data to the container. You will need to specify the path to mount in your config. The simplest approach is to put your config and your CSV in the same directory and then mount that directory to the container.

- Database: If you're using a database as your data source, then you'll need to make sure you've exported the relevant environment variables. These are specified in the previous section.
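As an illustration of the volume-mount option, the sketch below assembles a `docker run` argument list that bind-mounts a host directory to the container's default config location. It is a sketch under stated assumptions, not an official invocation: `docker_run_args` is a hypothetical helper, `<pod-image>` is a placeholder for the actual image name (which is not given here), and your platform may need additional flags.

```python
from pathlib import Path


def docker_run_args(host_dir, image, container_dir="/mount/config"):
    """Build a `docker run` argument list that bind-mounts host_dir.

    Hypothetical helper; the default container_dir matches the directory
    the Pod image reads config.yaml from by default.
    """
    # Bind mounts need an absolute host path.
    host_dir = Path(host_dir).resolve()
    return [
        "docker", "run",
        "--volume", f"{host_dir}:{container_dir}",
        image,
    ]


# "<pod-image>" is a placeholder, not a real image name.
args = docker_run_args(".", "<pod-image>")
print(" ".join(args))
```

The resulting list could be passed to `subprocess.run(args, check=True)`. If a CSV sits in the same mounted directory, the data path in the config would presumably need to be the container-side path (under `/mount/config/`) rather than the host path.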

Once your container is running you will need to check the container logs and complete the login step, allowing your container to authenticate with Bitfount. The process is the same as when running locally (e.g. the tutorials), except that we can't open the login page automatically for you.

Configuring GPU support

If you are using a PyTorch model, the GPUs are automatically configured under the hood (using PyTorch Lightning). Bitfount currently supports single GPU processing. The important thing is to ensure you have the correct version of PyTorch installed. The PyTorch maintainers provide a useful guide for ensuring this at https://pytorch.org/get-started/locally/

If running through Docker, you will also need to ensure that the Docker container has access to the GPU.
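To confirm that the installed PyTorch build can actually see a GPU, a quick check like the following can be run in the same environment as the Pod (or inside the container). This is a minimal sketch: `describe_gpu_support` is a hypothetical helper, and it only reports what PyTorch detects; it does not configure anything in Bitfount.

```python
def describe_gpu_support() -> str:
    """Report whether PyTorch is installed and can see a CUDA GPU.

    Hypothetical helper for illustration only.
    """
    try:
        import torch  # imported lazily so the check works without PyTorch
    except ImportError:
        return "PyTorch is not installed"
    if not torch.cuda.is_available():
        return "PyTorch is installed but cannot see a CUDA GPU"
    return f"PyTorch can see {torch.cuda.device_count()} CUDA GPU(s)"


print(describe_gpu_support())
```

With Docker, GPU access is typically granted through the `--gpus` flag of `docker run`, which relies on the NVIDIA Container Toolkit being installed on the host.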

Running Pods

Once you’ve created and authorised a Pod, you’ll need to ensure it is online before you or a collaborator can query or train models on the dataset associated with the Pod. For this reason, we recommend associating a Pod with an always-on machine rather than your local machine. This ensures collaborators can work asynchronously and reduces the communication required between parties. To get a Pod up and running or restart an offline Pod, you can:

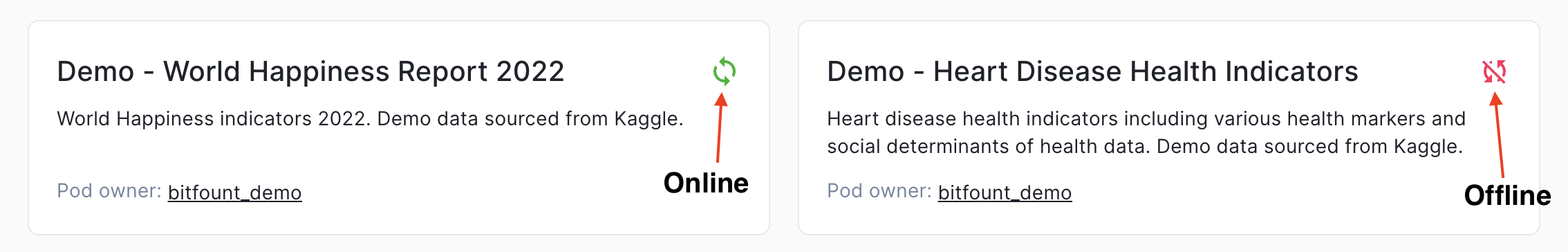

- Check if a Pod is online in the Bitfount Hub by going to “My Pods” and searching for it. You can tell if a Pod is online or offline based on the icon in its card:

- If a Pod is offline, you can re-start it by recreating the Pod as outlined in the steps above.

- Confirm the Pod is back online in the Bitfount Hub. At this stage, you may wish to notify your collaborators that the Pod is ready 🎉.

Next Steps

Congrats, you’ve successfully configured a Pod! You are now ready to authorise the Pod for yourself or your partners. For detailed instructions, see Authorising Pods.